One of the most compelling arguments in support of religion is the purely pragmatic one. What does it matter if religion is false, if god is totally made up, if faith is only a placebo effect, or even if it’s all ultimately just a scam to separate you from your hard-earned money? In the end, isn’t all that matters that it makes you happier and more successful?

Reporters and opinion writers propagate this pragmatic justification of religion every day. It is difficult to get through a single newspaper issue without encountering yet another article or op-ed touting the benefits of religion and faith. Here is an example of the typical happiness claims that appear almost every day in popular media:

Research suggests that children who attend church perform better in school, divorce less as adults and commit fewer crimes. Regular church attendees even exhibit less racial prejudice than their nonreligious peers. (see here).

These articles invariably cite scientific studies and statistics to support their claims. But those claims frequently go far beyond what the study design supports, or what the scientists involved actually concluded.

There are many ways that studies are misused by advocates to advance their causes or market their products. So we must all be very savvy when we see broad, sweeping conclusions being supported by narrow scientific studies, particularly by social science studies.

To help you recognize these manipulations, here are some of the typical falsehoods and distortions advocates use to misrepresent science or to promote bad science.

Failing to Mention the Negatives

Studies show that chocolate supplies 11 grams of fiber! Wow, maybe we should all eat chocolate to get our fiber! But to get that 11 grams of fiber from chocolate you have to consume a whopping 600 calories. Likewise, advocates tout studies highlighting selected admirable ethical qualities of religious people, but fail to mention other studies showing, for example, that religious people are far more likely to support torture, guns, violence, and drone attacks.

Failing to Mention Better Alternatives

Another way advocates misuse studies is by failing to mention far better alternatives. For example, the chocolate industry fails to mention that practically any fruit, vegetable, or grain is a far healthier source of fiber. That may not be their responsibility, but if they are implying that you should eat chocolate in order to get your fiber, they are lying. Likewise, advocates often tout the morality of religious people, implying that religion is the only way to achieve these values. But you don’t need to consume 600 calories to get your fiber and you do not need religion along with all its negative characteristics to be a good person.

Failing to Quantify the Benefits

Advocates will often claim a benefit without quantifying it, thereby giving a false impression of how important it is. For example, religion advocates may cite studies showing that fewer religious people go to prison, without mentioning how tiny that difference is.

Misrepresenting Statistics

Advocates often misrepresent statistics. If they are trying to magnify a small difference, they report it as a percentage or ratio. If they are trying to exaggerate a tiny difference in a huge population, they cite the raw numerical difference. For example, religion advocates might claim that “secular people are twice as likely to commit suicide as religious ones.” Sounds fantastic, right? But this could very well mean that out of a population of 10,000 people, evenly split between the two groups, 1 religious person committed suicide and 2 non-religious people committed suicide. Not quite as alarmingly persuasive when presented that way.
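To see the framings side by side, here is a small Python sketch of the arithmetic. It assumes, hypothetically, that the 10,000 people split evenly into 5,000 secular and 5,000 religious people; the 150,000,000-person population at the end is likewise invented purely to show how the “huge population” trick works.

```python
from fractions import Fraction


def describe_difference(cases_a: int, size_a: int, cases_b: int, size_b: int) -> dict:
    """Compare the rate of an outcome in group A with group B in three framings."""
    rate_a = Fraction(cases_a, size_a)
    rate_b = Fraction(cases_b, size_b)
    return {
        "relative_risk": rate_a / rate_b,              # the "twice as likely" framing
        "absolute_difference": rate_a - rate_b,        # raw difference in rates
        "percentage_points": (rate_a - rate_b) * 100,  # same difference, in points
    }


# Hypothetical split: 2 of 5,000 secular people vs. 1 of 5,000 religious people
result = describe_difference(cases_a=2, size_a=5_000, cases_b=1, size_b=5_000)
print(float(result["relative_risk"]))      # 2.0
print(float(result["percentage_points"]))  # 0.02

# The same tiny rate difference, applied to a hypothetical 150,000,000 people
extra_cases = result["absolute_difference"] * 150_000_000
print(extra_cases)  # 30000
```

The relative risk of 2.0, the 0.02-percentage-point gap, and the 30,000 “extra cases” all describe the same underlying rates; only the framing changes.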

Using Bad Indicators

In epidemiology, an indicator is a specific measure that can be used to gauge a more general condition. But a bad indicator tells us little or nothing about the general trait being evaluated. For example, religion advocates typically conclude that believers are “happier” based upon highly questionable measurements, such as divorce rate, which have little to do with happiness. As we all know, married people can be far more miserable than divorced ones.

Failing to Prove Causation

Most of the studies behind these claims are observational, or association, studies. That is, they simply show that two variables are both observed in, or associated with, a given population. This is valuable information. But proving that those two variables are directly related to each other is quite difficult, and proving that one causes the other is even more difficult. Even if two things seem to be related, they may be only indirectly associated through some third thing called a confounding factor.

For example, a study may show that churchgoers cheat less on their spouses. That is merely an association. But advocates use that observed association to claim that church attendance promotes ethical behavior, even though the researchers themselves never made that claim. It may well be that church attendance and marital fidelity are not directly related at all, let alone that church attendance causes fidelity as advocates claim. The most we could say based on the research is that, for whatever reason, people who go to church are also more likely to be people who have fewer affairs. Maybe the reality is that unattractive people tend to go to church in a desperate and futile attempt to start an affair. Attractiveness may be just one confounding factor here. That we cannot determine or even imagine what the confounding factors may be is not proof of causality.
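The church-and-fidelity example can be made concrete with a small Python sketch. Every number below is invented, and “attractiveness” is simply the post’s tongue-in-cheek confounder. By construction, attending church changes nothing about affair rates within either attractiveness group, yet pooling everyone together still makes churchgoers look more faithful.

```python
from fractions import Fraction

# Hypothetical population, invented for illustration: church attendance has NO
# effect on affairs here. A confounder ("attractiveness") drives both.
# Each row: (attractiveness group, attends church?, number of people, affair rate)
population = [
    ("less attractive", True, 700, Fraction(1, 10)),
    ("less attractive", False, 300, Fraction(1, 10)),
    ("more attractive", True, 300, Fraction(3, 10)),
    ("more attractive", False, 700, Fraction(3, 10)),
]


def affair_rate(rows):
    """Overall affair rate when the given subgroups are pooled together."""
    people = sum(size for _, _, size, _ in rows)
    affairs = sum(size * rate for _, _, size, rate in rows)
    return affairs / people


def pct(x):
    """Format a fraction as a whole-number percentage."""
    return f"{float(x):.0%}"


# Naive comparison that ignores attractiveness entirely
churchgoers = [row for row in population if row[1]]
others = [row for row in population if not row[1]]
print("churchgoers:", pct(affair_rate(churchgoers)))  # churchgoers: 16%
print("others:", pct(affair_rate(others)))            # others: 24%

# Stratified comparison: attenders vs. non-attenders with the same attractiveness
for group in ("less attractive", "more attractive"):
    attend = [row for row in population if row[0] == group and row[1]]
    skip = [row for row in population if row[0] == group and not row[1]]
    print(group, pct(affair_rate(attend)), pct(affair_rate(skip)))
```

Comparing people only within the same attractiveness group (stratifying) is one standard way to check for a suspected confounder, but it only works for confounders someone thought to measure.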

Failing to Consider Reporting Bias

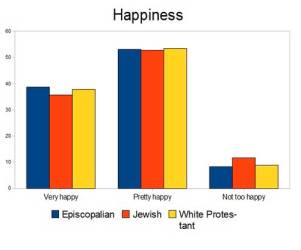

Many of the narrow social studies used to make sweeping claims rely upon self-reporting. However, self-reporting is incredibly unreliable. People intentionally or unintentionally report all kinds of things in all kinds of ways for all kinds of reasons. For example, men are likely to brag about their infidelity while women are likely to conceal it. Self-reports are poor measures of the relative level of infidelity between the sexes. Likewise, religious people are deeply invested in the fiction that religion makes them happier and are very likely to report that they are even if they are totally miserable.

Failure to Mention Study Limitations

Years ago, upon reading the commonly cited statistic that “98% of women report incidents of sexual abuse,” radio host Dr. Laura Schlessinger did what a good consumer of information should do. On air, she called the scientist who conducted the study being cited to support this claim. The researcher was eager to express her frustration with all of the advocacy groups citing her research without mentioning that her study narrowly targeted an extremely at-risk population. Dr. Laura then called the head of one of the women’s advocacy groups using this scare tactic and asked her why she knowingly misrepresented the research. When confronted, the head of the organization stood firm, saying that anything is justified if it raises awareness of real issues faced by women.

Choosing the Wrong Measurement

Even if we could measure happiness, it should not be assumed that happiness is the best or only goal. Believing that global warming is a hoax probably does make one sleep sounder. Allowing your kids to eat pizza at every meal will probably result in fewer observed food-related tantrums. But clearly these measures of happiness do not justify accepting those positions. Likewise with religion.

Selective Skepticism

We tend to be pretty good at being skeptical about things we disagree with. But when it’s something we’re predisposed to like and want, like chocolate or religion, we tend to set all skepticism aside and wholeheartedly embrace any argument in favor, no matter how much of a stretch it may be. The happiness arguments supporting religion are definitely one area in which our society demonstrates far too little critical scrutiny, as evidenced by the huge number of happiness claims repeated in major publications with virtually no skeptical analysis.

Baby With the Bath Water

Please, please, please don’t conclude from this that you can never trust social studies and that these studies never have any value at all. Association studies are very valuable. We need to know when things are observed together in a given population. However, you should be a smart consumer of these studies and understand the ways that advocates misuse study results to contrive claims that advance their cause. This is particularly important when we are predisposed to believe those claims. When in doubt, look past the claims made by advocates or even by seemingly objective “science reporters” and read the typically more careful and restrained conclusions reported by the scientists who conducted the studies. With the Internet at our fingertips today that is not usually very difficult to do.

Very much comes under the “bad science” heading. The sad fact is that too many won’t and don’t take the advice of your last couple of sentences… easier just to agree with anything that reinforces their views and increasingly these days berate anyone who dares to differ.