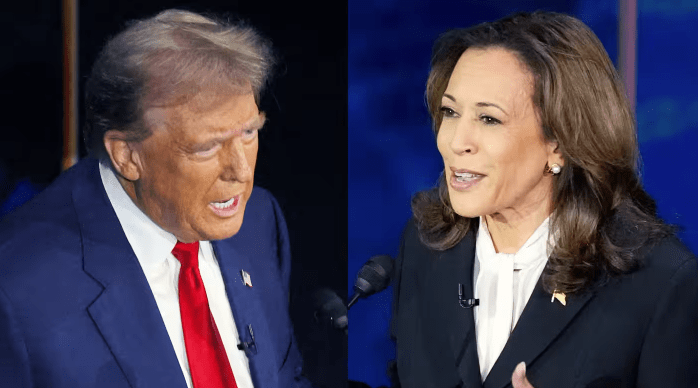

Last night many of us saw the 2024 Harris-Trump Presidential Debate on ABC. What any clear-minded viewer should have seen was a stark contrast between an eminently smart, qualified, and ethical woman with a passion for public service and the stupid, disqualified, and completely unethical wannabe dictator she was forced to debate, a man to whom public service is no more than a grift in service of an unbounded appetite for self-aggrandizement and settling personal scores.

Let’s be perfectly clear: while some of Trump’s statements might have contained some arguable grain of truth, or might be sane-itized in some fashion to sound coherent, he was substantively lying or mistaken about practically everything he asserted.

That is not to say that, as someone who is generally Liberal on most issues, I was perfectly satisfied with Harris’ performance or positions on every issue. Contrary to what some on the Right might like to think or claim, she is certainly not my personal wet-dream of a candidate.

Following are some of the particular things that disappointed me about Harris’ performance and positions in last night’s debate:

- On guns, Harris forcefully emphasized her support for guns and for the 2nd Amendment. I would have liked her to vow to begin reducing the number of guns in private hands and to rationally reinterpret the calamitous 2nd Amendment, or better yet support repealing it completely.

- On the military, Harris emphasized her desire to ensure we have “the most lethal military in the world.” I would prefer that we aspire to having the most efficient, effective, and ethical military in the world.

- On the pullout from Afghanistan, rather than merely touting what a great job we did, I would have liked to see Harris express some of the deep sense of loss that any commander-in-chief should feel when soldiers are lost, and commit to doing everything in her power to prevent the loss of life on both sides, while acknowledging the inevitable losses that will occur in military conflicts.

- On Gaza, I would have very much preferred that Harris stop playing both-sidesism on this issue and differentiate herself from the Biden entrenchment of unqualified support and unlimited military funding for Israel, with only platitudes for the victims in Gaza.

- On abortion, while it was good that she clarified that post-birth abortions are not actually a thing, Harris left hanging the issue of late-term abortions by so obviously avoiding it. It is disappointing that she, like most of the abortion-rights community, fuels unwarranted paranoia about the frequency of late-term abortions by failing to address the issue head-on with actual facts.

- On fracking, Harris’ support felt like pandering to Pennsylvania. I would have preferred that she accompany her support for fracking with an initiative to improve the industry by helping it to reduce the unnecessarily high levels of methane emissions throughout the entire fracking pipeline.

- On taxation, I would have preferred that Harris stick with the more progressive Biden taxation levels and other fiscal policies for the ultra-rich.

- Harris disappointed me by failing to adequately call out Trump’s insistence that a tariff is not a tax. She did refer to it indirectly as a Trump-tax, but she was not clear that she was referring to the tariff. The moderator did a better job of fact-checking Trump on this very important and insistently repeated false claim.

All of this is not to nitpick Harris, but to point out that her positions aren’t entirely in line with my own on important issues that I care about deeply. But presidential candidates hardly ever align completely with our own views on every issue. And often it can be a difficult calculus to decide which candidate to support.

Harris is also a very moderate leader. I wish we could elect a more positively radical leader to tackle things like climate change and wealth inequality more aggressively and more quickly. But radical leaders, even those who are radical in positive ways, can’t get elected in a healthy pluralistic democracy. And we certainly should never seriously consider electing a dangerously radical leader like Donald Trump ever again.

So regardless of how your issue-by-issue calculus works out, and regardless of your deeply felt priorities, whom to support in this election is not even a legitimately debatable question. This election is about whether you are willing to recklessly risk your nation and your future on someone who is utterly corrupt and destructive, merely because you like some of his positions or don’t like some of hers.